Turning 3D Data into Tools for Conservation

As part of the production team, our job is to take all the data collected by the field team and turn it into useful products for our various stakeholders. Over the last six months we have been updating our production pipeline so that it can produce a wider variety of outputs that support the conservation, recovery and discovery of cultural heritage. To make our methods more transparent, we want to highlight some of the conservation outputs we generated from our recent work at Bagan, Myanmar. We captured a number of monuments on this most recent trip and were fortunate enough to return to Eim Ya Kyaung, a temple we had also documented before the earthquake. To support the conservation of the monument, we produced a set of standard architectural drawings from our data.

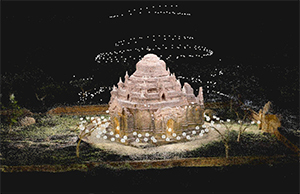

For Eim Ya Kyaung we started with our standard inputs of LiDAR, terrestrial photogrammetry and aerial imagery:

- 409 aerial images shot with a DJI Phantom 4 Pro in Point of Interest mode.

- 3,055 ground photogrammetry images shot using a GigaPan robotic head.

- 1 E57 point cloud (approx. 106 scans).

All data was processed over a three-week period. We used FARO SCENE to register and process the scans and to export the E57 point cloud.

All photogrammetry images were shot as bracketed HDR exposures and processed using Lightroom, X-Rite, and Photomatix. The images and the scans were then brought into RealityCapture (RC). We sorted the images into Interior, Exterior, and Aerial groups and aligned each group into its own component. These components were then combined with the point cloud using ground control points (GCPs) in RC. A high-resolution model was reconstructed primarily from the laser scan data (LSPs), supplemented by some of the aerial images to ensure definition on the roof.
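Combining components through GCPs amounts to estimating the transform that maps one set of control point coordinates onto another. As a rough illustration of that idea (not how RealityCapture implements it), here is a minimal Python sketch that fits a similarity transform (scale, rotation, translation) to matched control points by least squares. To stay short and dependency-free it assumes a planar, plan-view simplification and treats points as complex numbers; the real alignment is 3D. The function names and sample coordinates are hypothetical.

```python
import math


def fit_similarity_2d(src, dst):
    """Fit dst ~= a * src + b in the least-squares sense.

    Points are (x, y) pairs treated as complex numbers, so the complex
    factor a encodes scale (abs(a)) and rotation (its angle), while b is
    the translation. Planar simplification of the 3D GCP problem.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matching control points")
    zs = [complex(x, y) for x, y in src]
    ws = [complex(x, y) for x, y in dst]
    n = len(zs)
    z_mean = sum(zs) / n
    w_mean = sum(ws) / n
    # Center both point sets, then solve for a in closed form.
    num = sum((w - w_mean) * (z - z_mean).conjugate() for z, w in zip(zs, ws))
    den = sum((z - z_mean).real ** 2 + (z - z_mean).imag ** 2 for z in zs)
    if den == 0:
        raise ValueError("source control points are all identical")
    a = num / den
    b = w_mean - a * z_mean
    return a, b


def apply_transform(a, b, point):
    """Map an (x, y) point through the fitted transform."""
    w = a * complex(*point) + b
    return (w.real, w.imag)


def rms_residual(a, b, src, dst):
    """Root-mean-square distance between transformed src points and dst points."""
    sq = [abs(a * complex(*p) + b - complex(*q)) ** 2 for p, q in zip(src, dst)]
    return math.sqrt(sum(sq) / len(sq))


# Hypothetical example: dst is src scaled by 2, rotated 90 degrees, shifted by (5, 5).
src = [(0, 0), (10, 0), (10, 10), (0, 10)]
dst = [(5, 5), (5, 25), (-15, 25), (-15, 5)]
a, b = fit_similarity_2d(src, dst)
scale = abs(a)
rotation_deg = math.degrees(math.atan2(a.imag, a.real))
print(f"scale={scale:.3f} rotation={rotation_deg:.1f} deg rms={rms_residual(a, b, src, dst):.2e}")
```

With real control points the residual is never exactly zero, and an unusually large RMS is a practical hint that one of the points was mismatched.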

The mesh was simplified to the target polygon count in RC and cleaned up using Autodesk ReMake. It was then split into chunks using the Reconstruction Box feature in RC so that each chunk could be unwrapped individually (overlap was preserved between chunks to prevent texturing issues). All chunks were then textured in RC and exported to Autodesk Maya for final editing and reassembly. All subsequent refinement, including removing overlapping geometry and ensuring complete coverage and consistent color across all textures and border edges, was done in RC, Autodesk Maya, Mudbox, and ReMake.
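Splitting a model into overlapping chunks comes down to choosing box extents that share a margin at every internal boundary. The Python sketch below computes such extents, assuming axis-aligned boxes and a hypothetical 0.5 m overlap. In practice the Reconstruction Box is placed inside RC itself, so `Box`, `split_axis` and `chunk_boxes` are illustrative names, not part of any RC API.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Box:
    """Axis-aligned box given by its minimum and maximum corners."""
    min_corner: tuple[float, float, float]
    max_corner: tuple[float, float, float]

    def contains(self, point: tuple[float, float, float]) -> bool:
        return all(lo <= v <= hi for lo, v, hi in zip(self.min_corner, point, self.max_corner))


def split_axis(lo: float, hi: float, parts: int, overlap: float) -> list[tuple[float, float]]:
    """Split [lo, hi] into equal parts, widening each internal boundary by `overlap`."""
    step = (hi - lo) / parts
    spans = []
    for i in range(parts):
        start = lo + i * step - (overlap if i > 0 else 0.0)
        end = lo + (i + 1) * step + (overlap if i < parts - 1 else 0.0)
        spans.append((start, end))
    return spans


def chunk_boxes(min_corner, max_corner, parts_xyz, overlap):
    """Return one overlapping box per grid cell over the model's bounding box."""
    axes = [split_axis(lo, hi, n, overlap) for lo, hi, n in zip(min_corner, max_corner, parts_xyz)]
    return [
        Box(tuple(span[0] for span in cell), tuple(span[1] for span in cell))
        for cell in product(*axes)
    ]


# Hypothetical 20 m x 10 m x 8 m bounding box, split in two along x with 0.5 m overlap.
boxes = chunk_boxes((0.0, 0.0, 0.0), (20.0, 10.0, 8.0), (2, 1, 1), 0.5)
for box in boxes:
    print(box)
```

Geometry near a seam falls inside both neighbouring boxes, which is what lets each chunk be textured independently and then trimmed back during reassembly.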

An FBX file of the environment was generated in Maya and then re-imported into RealityCapture to produce orthographic TIFFs using its built-in ortho projection tool.
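An ortho TIFF is an orthographic (parallel) projection of the model onto a plane, rasterized at a fixed ground sample distance (GSD). The minimal sketch below shows the principle using colored points and a simple per-pixel depth test. It is not RealityCapture's implementation, and the function name, parameters and sample points are hypothetical.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def ortho_splat(points, origin, right, up, gsd, width, height):
    """Rasterize colored 3D points into a width x height orthographic image.

    origin: bottom-left corner of the ortho extent on the projection plane.
    right, up: orthonormal in-plane axes; gsd: size of one pixel in model units.
    The viewer is assumed to sit on the +normal side (normal = right x up),
    so among points landing in the same pixel the one with the largest
    signed distance along the normal wins (a simple depth test).
    """
    normal = cross(right, up)
    image = [[None] * width for _ in range(height)]
    depth = [[-math.inf] * width for _ in range(height)]
    for position, color in points:
        d = sub(position, origin)
        s, t, z = dot(d, right), dot(d, up), dot(d, normal)
        col = math.floor(s / gsd)
        row = height - 1 - math.floor(t / gsd)  # row 0 is the top of the image
        if not (0 <= col < width and 0 <= row < height):
            continue  # outside the ortho extent
        if z > depth[row][col]:
            depth[row][col] = z
            image[row][col] = color
    return image


# Hypothetical facade in the x-z plane, viewed from the -y side (normal = (0, -1, 0)).
pts = [
    ((0.5, 2.0, 0.5), "far"),
    ((0.5, -1.0, 0.5), "near"),
    ((1.5, 0.0, 1.5), "top"),
    ((5.0, 0.0, 0.0), "outside"),
]
img = ortho_splat(pts, origin=(0.0, 0.0, 0.0), right=(1.0, 0.0, 0.0),
                  up=(0.0, 0.0, 1.0), gsd=1.0, width=2, height=2)
for line in img:
    print(line)
```

Because the projection is parallel, a pixel covers the same real-world size anywhere on the facade, which is what makes these images measurable in a way that ordinary perspective photos are not.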

The ortho TIFFs were then used to generate architectural drawings, which were sent to the site authorities and the conservation team. These detailed drawings are essential for assessing the structure after the earthquake and will guide any conservation and stabilization efforts on site.
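Because architectural drawings are produced at a fixed scale, the ortho resolution has to be fine enough for the intended print. Assuming a hypothetical 1:50 drawing printed at 300 dpi (not necessarily the settings used for Eim Ya Kyaung), each image pixel may cover at most 25.4 / 300 × 50 ≈ 4.23 mm of the real surface. The small helper below does this arithmetic; its names are illustrative.

```python
import math

MM_PER_INCH = 25.4


def required_gsd_mm(scale_denominator, dpi):
    """Largest real-world pixel size (mm) that still gives one image pixel per printed dot."""
    paper_mm_per_dot = MM_PER_INCH / dpi
    return paper_mm_per_dot * scale_denominator


def pixels_needed(extent_m, scale_denominator, dpi):
    """Minimum pixel count to cover a real extent (metres) at that scale and dpi."""
    return math.ceil(extent_m * 1000.0 / required_gsd_mm(scale_denominator, dpi))


# Hypothetical example: a 20 m elevation drawn at 1:50 and printed at 300 dpi.
gsd = required_gsd_mm(50, 300)
print(f"max GSD: {gsd:.2f} mm/pixel, width: {pixels_needed(20.0, 50, 300)} px")
```

A larger drawing scale (for example 1:20) or a higher print resolution shrinks the allowable GSD proportionally, so detail drawings need correspondingly denser orthos.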